Behind the scenes: Making a head-turning video with AI

What's the secret to AI and video production? Trial and error. In this behind-the-scenes breakdown, we show you how we used AI (and a lot of human instinct) to bring our campaign video to life—from storyboard to final cut.

A year ago, AI and Taylor Swift were the top two online searches. A year later, while Swift has taken a step back from the spotlight, AI is everywhere, and its creative applications, including short-form video production, continue to grow.

That’s why Superside’s Director of Brand & Marketing Creative, Piotr Smietana, is helping us share the story of how we created our Q2 2025 campaign video, so you can see where AI shines, what’s still a work in progress and how human creativity will always lead the way.

Backstory: How AI got the gig

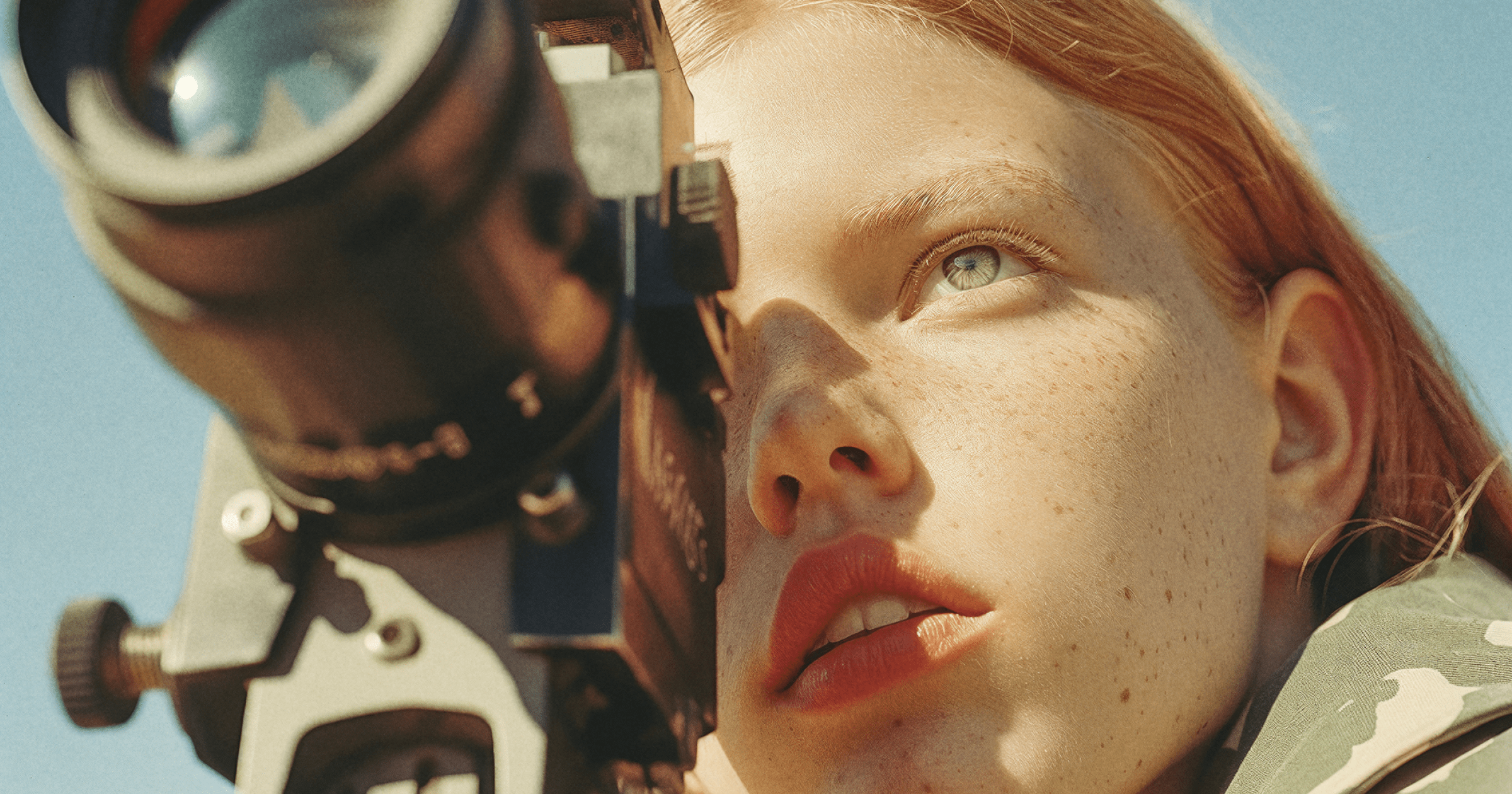

We had an idea for our Q2 campaign video. Rather than showing the work, we wanted to focus on what it’s like when people experience the work. The moment it lands. The subtle lean-in. The breath-held pause.

We also had to get our video produced quickly and efficiently—while staying true to our brand. But, like many teams, we didn’t have a luxuriously long timeline or A-list budget.

Opportunity cost vs. AI opportunity

From orchestrating multiple resources to overseeing an epic list of tasks, the complexity of traditional production would have made this video a no-go.

With AI, we can take the chance, transforming static images, existing footage or just an idea into video that turns heads. And we can do this 40% to 60% faster and more cost-effectively.

A multifaceted talent

Video production—even for short-form ad content—is a carefully choreographed series of steps. As you’ll see, in some places, AI can step in and start dancing right along. In others, not so much. Ready? Let’s dance!

Where's the video? We're saving that for the grand finale.

Storyboarding: A mix of lo-fi and AI

I wasn’t thinking about polish. I just needed to know: Does the concept hold up? Is there a rhythm to the reactions? Can we feel the emotional arc?

We started with a story to tell and a blank Figma file. Our core creative insight: Consistency is everything. Scenes that look completely disconnected from each other—different styles, characters morphing, bizarre in-between frames—are one of the biggest challenges with AI-generated video.

To figure that out, we turned to a mix of lo-fi and AI. We used AI imaging to generate rough placeholder frames to build an early storyboard. These early frames weren’t pretty or consistent. But they gave us enough to build around, ensure cohesion and determine whether the story would land.

Director’s note: The storyboard doesn't have to look good. It just has to feel right.

Scripting: Mostly human, a bit AI

ChatGPT was like having a script assistant who never got tired. But we never let it take over. If the tone wasn’t right, we tossed it.

The first draft was about finding the right flow and emotional weight. Superside humans wrote collaboratively, sharing feedback, cutting lines and tightening scenes so that the pacing matched the modular nature of the short, punchy segments.

To push it further, we used AI for alternate takes on lines. ChatGPT helped us develop more conversational phrasing and even rework transitions.

Director’s note: Let AI help with scripting, but keep humans in charge of tone, pacing and story.

Asset generation: Creating a consistent visual universe

The placeholders got us moving, but now we had to make it ours. That meant building a visual world that felt consistent. Not just nice-looking, but narratively cohesive.

As we moved from placeholder to real-world (reel-world?), we used Visual Electric to generate a consistent visual universe—characters, environments, props.

Once we had the right look, we went frame-by-frame, post-producing each image—adjusting color palettes, correcting lighting and ensuring everything was true to our brand. Pretty pictures weren't enough—we needed a consistent visual language to support the storytelling.

Planning ahead

We also created start and end frame variants of each key scene, which served as anchor points for the motion design—giving the AI video tools something solid to build from.

Director’s note: AI can create images, but creative direction is what turns them into a visual universe.

Motion design: Feeding the machines

We approached this step like old-school animation. Start frame. End frame. Everything else in between? That’s where the AI filled in the blanks.

Armed with our storyboard and post-produced stills, we started generating motion. The start and end frames (the AI equivalent of keyframes in traditional animation) were fed into Runway, Google Veo 2 and Kling.

We A/B tested each scene across all three tools, looking for the cleanest transitions, the most intentional styles and the fewest visual artifacts.

The challenge: Current AI video tools tend to break after 5–6 seconds. So we designed each scene to stand alone—modularity that would be very helpful in post-production.

This stage was a mix of experimentation and curation. We ran dozens of generations for some shots, constantly tweaking prompts, durations and inputs.

Where AI didn’t cut it, we got scrappy.

Some scenes used stock footage, either as base layers or cutaways. We also started adding traditional 2D animation overlays—text builds, animated transitions and headline reveals. These were created the usual way, in After Effects and Figma—providing further structure and clarity.

Director’s note: AI motion needs structure. Think like an animator and be ready to iterate.

Sequencing, sound and smooth talking

We had a lot of raw material, but no idea how it actually felt when stitched together. The animatic was our first chance to hear the rhythm. To sit with it and see if it moved.

Assembling an animatic—a rough, moving version of the video using all the draft scenes—was vital for establishing pace, rhythm and emotion.

We auditioned music tracks, adjusted timings and experimented with how long to linger on certain shots. Scenes that felt static were swapped out and voice-over timing was tested.

Initially, we thought we’d use AI-generated voice-over. But one of the biggest lessons of the project was that AI still struggles with emotional intonation. Most text-to-speech models sound robotic or overly polished.

To ensure full control over the tone and delivery, we had members of our marketing and creative teams run the lines.

Then, because we’re not trained voice actors, we ran those recordings through ElevenLabs, applying voice model layers after the fact. That gave us the best of both worlds: human authenticity and technological polish.

Who made the cut? Senior Director of Product Marketing, Josh Mendelsohn.

Director’s note: AI voice tools are flexible, but real emotion starts with a human performance.

Post-production: Adding the final polish

Once the AI footage, animations, overlays, music and voice-over were assembled into a full cut in Adobe Premiere, we embarked on our final rounds of polish.

Visually, we applied color grading to unify the look across the different visual formats. And because AI-powered video can look almost too clean, we added film grain to infuse imperfection.

We refined the audio, balancing the voice-over, music and sound effects, and meticulously troubleshot the transitions.

Director’s note: The final layer of polish is where intention shines. It’s what makes the video feel real.

And the crowds went wild... sort of

“You only see the reaction. But that’s all you need.” When he described the concept for the campaign video, Smietana couldn't have been more right.

An immediate top performer, the short-form video ads not only drove conversions, they sparked conversations. Fans and critics both raved about the pros and cons of AI. (Thank you, social media.)

While trained eyes can spot AI-generated content, this blend of reality and creativity is the way of the future, helping teams move faster and take more creative risks. And as our video shows, if your storytelling is on point and your creative eye keen, your results will speak louder than any critic.

No wonder the quality and performance of our customer work made headlines in a Forrester Total Economic Impact™ study, demonstrating how partnering with Superside pays for itself within six months.


Alex is a freelance writer and newsletter aficionado based in Waterloo, Ontario. When he’s not writing for clients, he’s putting together TL;WR, a weekly culture and events newsletter his mom says is excellent. Alex has worked with some of Canada’s largest tech companies in PR, marketing and communication roles. Connect with him on LinkedIn to chat or get ideas on what to do this weekend in Waterloo.

Ex-copywriter turned content strategist with two decades of creative chaos under her belt. She's helped scale content, brands and frozen pies—yes, really. Now? She empowers creatives to work smarter, not smaller.
