7 AI Design Trends in 2024 as Predicted by Our Experts

AI has completely changed how designers operate across the globe, from influencing new design processes to opening up new trends across graphic, motion, web and UX/UI design. It’s a lot to keep up with. Stay ahead of the curve and learn about the 7 most impactful and popular AI design trends for 2024 as predicted by Superside experts.

Just as Da Vinci revolutionized the High Renaissance and Picasso introduced Cubism, we’ve entered a new design era in 2024: AI. For the first time, we’re seeing text-to-interface AI used in graphic and creative design. Big brands like Coca-Cola and Cadbury are even making headlines for their use of generative AI in advertisements.

With over 200 AI-enhanced design projects under our belt, we've witnessed this revolution firsthand. In our latest collaboration with Opa, we leveraged Midjourney to generate mouthwatering food shots, guaranteed to get you hungry.

The reality is that we’re living through a transformative era of AI and design that’s impossible to ignore. So much so that almost every popular design platform has incorporated AI into its products, like Canva’s Magic Design and Adobe’s Firefly.

The world’s your oyster when it comes to AI Design, which is both a blessing and a curse. This is why we’ve taken the time to talk to design experts and industry leaders, including Manuel Berbin, AI Creative Lead, and Phillip Maggs, Director of Generative AI Excellence, who use AI in their day-to-day design processes. Together, we’ve dissected the top seven AI design trends to help you stay on top of the ever-changing times and make AI your personal creative collaborator.

A Look into 2024's AI Design Trends

The arrival of generative AI was divisive in the design community. This was especially clear following the Adobe MAX 2023 conference, where creatives described AI as a “frenemy”. Some felt threatened by its impact on their jobs, while others felt excited by the possibilities.

But as Figma’s VP of Design Noah Levin explains, design has historically evolved alongside technology.

[AI is] there to help and support but it doesn’t replace the need for design.

It’s important to remember that this isn’t the first time we’ve seen a major shift in the way we design. Whether it was the first digital camera, or the newest design platform, changes in technology have challenged the way we work. (And in many cases propelled us forward.) And as always, we adapt, learn and improve the way we design.

All that’s to say that AI doesn’t replace—it enhances. AI is becoming a helping hand for design teams—delivering creative campaigns faster, better and at scale. This is why it’s mission-critical to understand the changes we’re seeing in AI design. Knowing how to work with AI can help you go from frenemies to lovers in no time.

AI-enhanced workflows are the future. There hasn't been this much innovation in the design space in a very, very long time. At Superside, we believe in integrating AI tools, training our creators and empowering them to use these new workflows, which will create incredible outcomes for our customers as well.

7 AI Design Trends Shaping the Future in 2024

Being on top of the latest trends is part of the job, whether it’s the 2019 trend of Marie Kondo-ing your home (and how that influenced design) or the latest trend of working “smarter, not harder” with AI.

Let’s walk through the most influential AI design trends that will ultimately shape how we approach design this year.

1. AI-powered photorealism

When speaking with AI leaders Manuel Berbin and Phillip Maggs, we learned that AI-generated images are becoming increasingly photorealistic.

In fact, AI image generators are shocking users with their ability to produce near-perfect photorealism. Seriously, you might get goosebumps. Recent research on AI hyperrealism even found that some AI-generated faces are indistinguishable from real human faces.

This trend enables creatives to create more engaging visuals with any setting and subject at a lower cost. So it’s no surprise that using AI-generated photorealistic images in designs is a trend we’ll see in 2024.

The main advantage of AI-powered production, especially for photorealism, is faster production and shorter iterations. Creatives can go from idea to image faster, and creative exploration becomes quick and affordable. It takes far less time and fewer resources to see if an idea works or to find an idea with the potential to be developed further.

Real-world examples of AI-powered photorealism

- Midjourney: Same prompt one year apart

The rapid development of AI photorealism is seen in AI design tools like Midjourney that are producing far more hyper-realistic images. You can even see how far AI-generated images have come through this example of someone using the same prompt one year apart:

Midjourney itself has emphasized advanced prompt education and how to produce more photorealistic images for designs. As a creative, knowing how to craft Midjourney prompts is your ticket to faster, more consistent and unique designs.

- AI-generated influencers: imma.gram

Beyond using AI-generated images for ad campaigns or your website, the internet has taken it to the next level by developing internet personalities known as “AI influencers.”

Take imma.gram for example, an AI-generated influencer with over 390,000 followers on Instagram. This virtual “person” has even partnered with several fashion brands, including Adidas, The North Face, and Coach.

Imma.gram isn’t the only one: Shudu, Aitana Lopez, Rosy Gram and Miquela are other up-and-coming AI influencers with millions of followers.

2. AI-enhanced character consistency

Imagine you’ve successfully prompted your dream character using AI. Now you want to apply this character in a new setting, with new clothes—but it looks slightly different each time. Character consistency is one of the biggest drawbacks designers have faced when using AI in Design.

It has also been one of the biggest bottlenecks for graphic designers over the past few years, along with seeing an unnecessary number of hands when generating people.

Luckily, creatives across the internet are sharing best practices on how to achieve consistency in Midjourney and Stable Diffusion using techniques like image prompting, seeding and model training.

As more users continue to experiment and riff on this trend, character consistency will be at the forefront of AI Design techniques.

Overall, AI character consistency is improving rapidly, which is logical as it enhances the value of these tools in production environments. Currently, it appears that we are taking for granted the initial creative phase of our interaction with generative AI tools, where they help break the blank page. However, collectively, we are now exploring and eagerly seeking more ways to integrate them into the actual production stages of projects.

Real-world examples of character consistency

- Lean on more tools: Whatplugin.ai and RenderNet

New tools including whatplugin.ai, RenderNet and community-generated Custom GPTs are emerging from this trend, promising character consistency in designs through features like face locking and multi-model generation.

- Creating Custom Pixar Illustrations with AI in 5 Days

At a recent event, the Superside team was tasked with using AI to create and print 21 Pixar-style posters featuring prospects and customers.

Generative AI Creative Lead Júlio Aymoré shares the importance of using better data sets when generating images. He used a website to match each customer with a celebrity look-alike, then fed pictures of these actors into ChatGPT and extracted descriptions to sharpen his image prompts. Through fast iteration and sharp techniques, the team delivered top-quality results while reducing design time by 98.79%.

With 3D modeling, it would have required one month to create just one character. AI tools enhance this process, allowing for better consistency and much faster turnarounds.

3. AI-fuelled motion graphics

Motion designers can now use AI to create hyper-realistic visuals, such as photorealistic 3D models and simulations. What’s most intriguing is the new, distinct AI animation style that’s emerging. As Manuel Berbin, AI Creative Lead at Superside, describes:

There’s a trend in motion and animation powered by AI that doesn’t seem to have a label yet, but it’s an overwhelming hyperkinetic motion that embraces photorealism yet has an uncanny valley, surreal aesthetic.

Creatives are taking advantage of AI’s semi-consistent and inconsistent video aesthetic to create engaging motion designs at a fraction of the cost.

At the moment, 3D and motion design with AI tools is hard. They're going to be the next dominoes to fall, and then we can start to have an impact in those territories. This will mean more of an explosion in creativity and creative possibilities.

Real-world examples of AI in motion graphics

- AI motion graphics with a human touch: Nike Advertisement

James Gerde, an AI animator, shared his rendition of a Nike ad made with AnimateDiff and After Effects editing. From bright colors and surreal animations to imaginative landscapes, he’s nailed the blend of AI motion graphics with human tweaks.

One of the biggest concerns I hear is that the look can be overwhelming, but I believe that we are moving towards a more expressive and hyper-engaging landscape when it comes to AI animations.

- Sora: OpenAI’s text-to-video model

OpenAI’s new text-to-video model can create 60-second clips with impressive accuracy and detail. It can handle complex camera motions and show multiple characters with vibrant emotions, all from a text prompt.

While this news has the visual effects (VFX) community divided, it undoubtedly opens new horizons for fast video production. Some even claim that it “helps overcome the problem of the 'white canvas'.”

4. AI-driven typography innovation

Times New Roman, Calibri and Arial—the starter pack for all your grade 8 assignments. Classic but predictable. Lucky for us, AI typography is on the rise and we’re here for it.

Historically, AI has struggled with generating text in images. But with the new models, text generation in images has drastically improved. Gone are the days of gibberish text!

In the near future, we’ll see typography get way wackier at a major scale since now it is more affordable to produce intricate results. Minimalism, which was widely adopted as a cost-efficient visual trend, will probably go out of fashion for the moment, as we’ll be collectively drunk on the maximalist possibilities of quick and affordable typography AI generation.

AI is especially powerful when it comes to developing variable fonts: fonts that can adapt and change based on the context. It’s also strong at analyzing a brand’s existing font parameters and reproducing similar typography.

While this development is exciting, the output of AI-generated fonts is challenging to control and rarely factors in legibility, readability and consistency. It also lacks the fundamental human design principles that are critical to typography design. So while AI is a strong creative companion for getting inspiration for your first draft, the combination of human creativity and AI makes for the best results.

Real-world examples of AI typography

- Generate new fonts: Appy Pie

Appy Pie’s AI Font Generator gives designers granular control over their font generation including letter shapes, stroke thickness, spacing and curves. This gives designers and typographers control over their brand identity and style while incorporating the power of AI to push the boundaries of their creativity.

- AI Images with text: DeepFloyd

Stability AI is directly improving the way that typography appears in images with its new generative AI model, DeepFloyd. The coolest part? It’s capable of rendering perfect typography in any context or scenario imaginable.

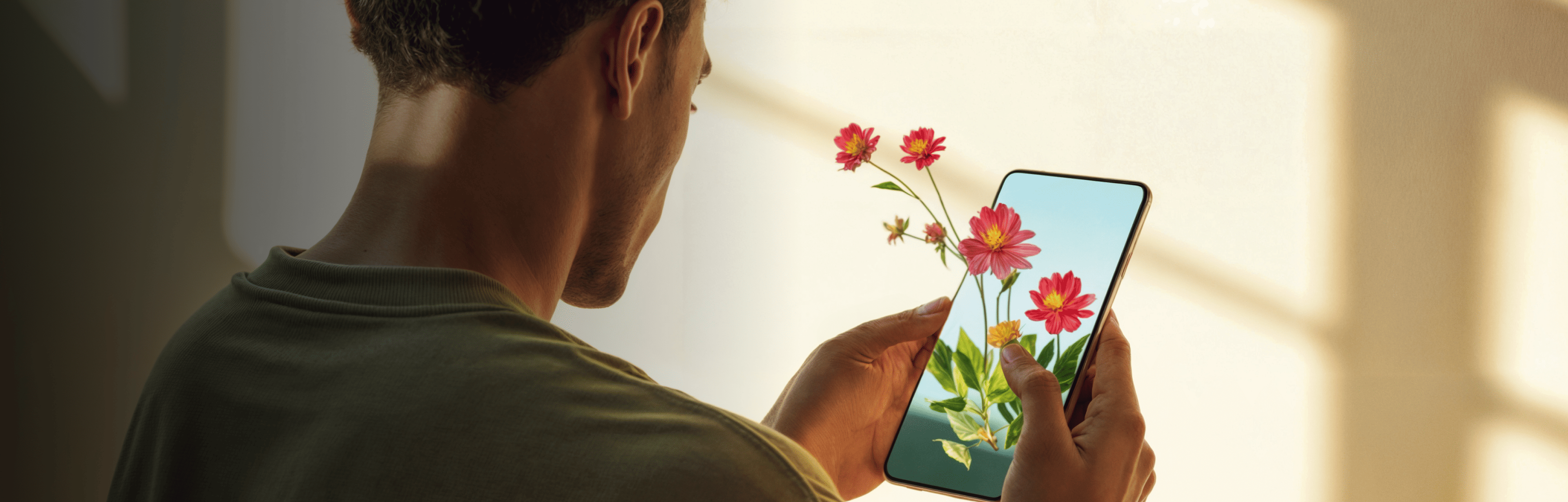

5. AI, VR and AR synergy in web design

With Apple’s latest Vision Pro taking over the headlines, VR and AR technology are predicted to be heavily integrated into our day-to-day lives. While it’s still new, we predict that web design will heavily benefit from this as businesses can showcase products and services in an entirely new channel.

In our latest Creative Leadership Assembly, hosted by Phillip Maggs, Dan Moller, AR Specialist at Meta, shared his thoughts on how AI and AR technologies are closely related.

AR and AI are not two separate things, they’re very closely related. Almost all AR experiences require AI to operate - to detect your face, to fit glasses to the contours of a face. There are so many other capabilities AR can have, and it all depends on AI to operate.

Using AI to accelerate VR and AR creation unlocks multiple use cases, from VR-based virtual trial rooms to AR interactive try-on experiences for eCommerce platforms.

AI is becoming more and more valuable for making AR apps better and getting started with AR. Dan pointed out that AR experiences need AI to do things like recognizing faces, adjusting glasses to fit your face shape, and understanding what's around you. So, while AR is like the front part of these apps, AI is the powerhouse behind them. And in 2024, we'll see AI used even more.

AI will be crucial in powering deeper levels of contextual understanding and creating AR experiences that are even more useful and relevant.

Real-world examples of VR and AR in AI-powered design

- TensorFlow for AI

New tools and frameworks are available for integrating AI, VR and AR into web development. A-Frame for VR, AR.js for AR, and TensorFlow for AI are just a few examples of tools that developers can use to create sophisticated and interactive experiences.

- Webflow

As AR, VR, AI and voice interaction technologies become more integrated into web design, there are big bold bets that Webflow will adapt by incorporating these technologies into its platform. This could look like new features built natively into Webflow to create immersive experiences that enable AI-driven personalization and voice search. They’ve already started curating AR website templates on their marketplace!

6. Deepfake tech and AI in design

Deepfakes are an AI-based technique that synthesizes media and superimposes human features on another person’s body to generate a realistic human experience. Believe it or not, some media and large brands are already using Deepfakes in marketing campaigns. However, this trend is tricky as there are tons of legal repercussions and copyright barriers that apply to Deepfakes.

I envision a scenario resembling "The Congress (2013)," where people license their faces and voices. Deals will vary based on market position, willingness to sell identity for profit, legal support and perhaps the desire for eternal youth in the public eye. This is already happening–people are licensing their faces and voices for fake “homemade influencer videos.”

Navigating legal nuances with creative work can be especially challenging, but it’s important considering most of us don’t want to be sued. That being said, there are several useful applications of Deepfakes, including enhancing talent skill sets, incorporating celebrity features in digital content and even augmenting video game characters.

As the right legal guardrails start developing, Deepfakes can make major headway in marketing and graphic design work.

Copyright law will naturally evolve to address emerging needs, especially regarding the affordable possibilities of "face/voice usage, profiting, and exploitation." I foresee a surge in both copyright law development and infringement. Hopefully, figures like Taylor Swift fighting misuse of their face and IP will set precedents, leading to significant lawsuits that compel major AI players to establish a reward system for affected illustrators and artists.

Real-world examples of Deepfake technology in AI design

- Shah Rukh Khan’s Cadbury Ad

Cadbury partnered with Ogilvy to craft a campaign promoting local businesses, featuring the Bollywood star Shah Rukh Khan. The premise was that small businesses rarely have the budget to hire celebrities to endorse their products. With Deepfake technology and Cadbury’s “Shah Rukh Khan-My-Ad” campaign, that was no longer a barrier.

- David Beckham’s Malaria No More Campaign

Text-to-video platform Synthesia went viral when it launched the “Malaria Must Die” campaign. The campaign featured malaria survivors, speaking in nine languages, whose voices were delivered through David Beckham. The team at Synthesia was even kind enough to share a behind-the-scenes walkthrough of how they did it:

7. AI-driven hyper-personalization in UX and UI Design

As the UX Institute summarizes, “With advancements in AI technology, personalization is now reaching unprecedented levels of complexity and sophistication. Cue the era of hyper-personalisation.”

AI empowers designers to create interfaces that adapt and cater to each user. Starting with a UI/UX audit can help to identify opportunities to further personalize user experience and boost user engagement.

Real-world examples of AI-driven hyper-personalization

- Spotify Wrapped

When Spotify Wrapped first launched, its personalization capabilities were basic. It relied on simple inputs such as user listening history, favorite artists and manually curated playlists to generate recommendations.

Now with AI, Spotify Wrapped includes “listening personalities” and predictive playlists.

- Sora

Sora, OpenAI’s text-to-video model, enables persona-based video creation. This new development lets users bring personas to life and simulate what it’s like for them to use a product. By powering realistic virtual videos, it lets businesses go from zero to hero on their personalization game. For designers, it also adds a new dimension to visualizing and prototyping complex user experiences in an immersive and dynamic way.

Get Ahead of the AI Design Curve With Superside

As the AI Design landscape rapidly evolves in 2024, staying ahead of the curve is essential to seizing new opportunities and embracing creative experimentation. If you’re looking to navigate AI Design in your team’s workflow, our in-house experts have been in the trenches and can be your strategic partner in crime. Let’s embrace these trends together and trailblaze this new era of design.

Who knows, we might be the Da Vincis of 2024.

Hiba Amin is a Contributing Writer at Superside. As a marketing leader who lives and breathes content, she's had the privilege of heading up content teams and has also been in the trenches as a marketing team of one. She's worked at a wide range of tech companies across PLG, SaaS and most recently, AI. Say hi to her on LinkedIn (and ask her about her dog, Milo!).
